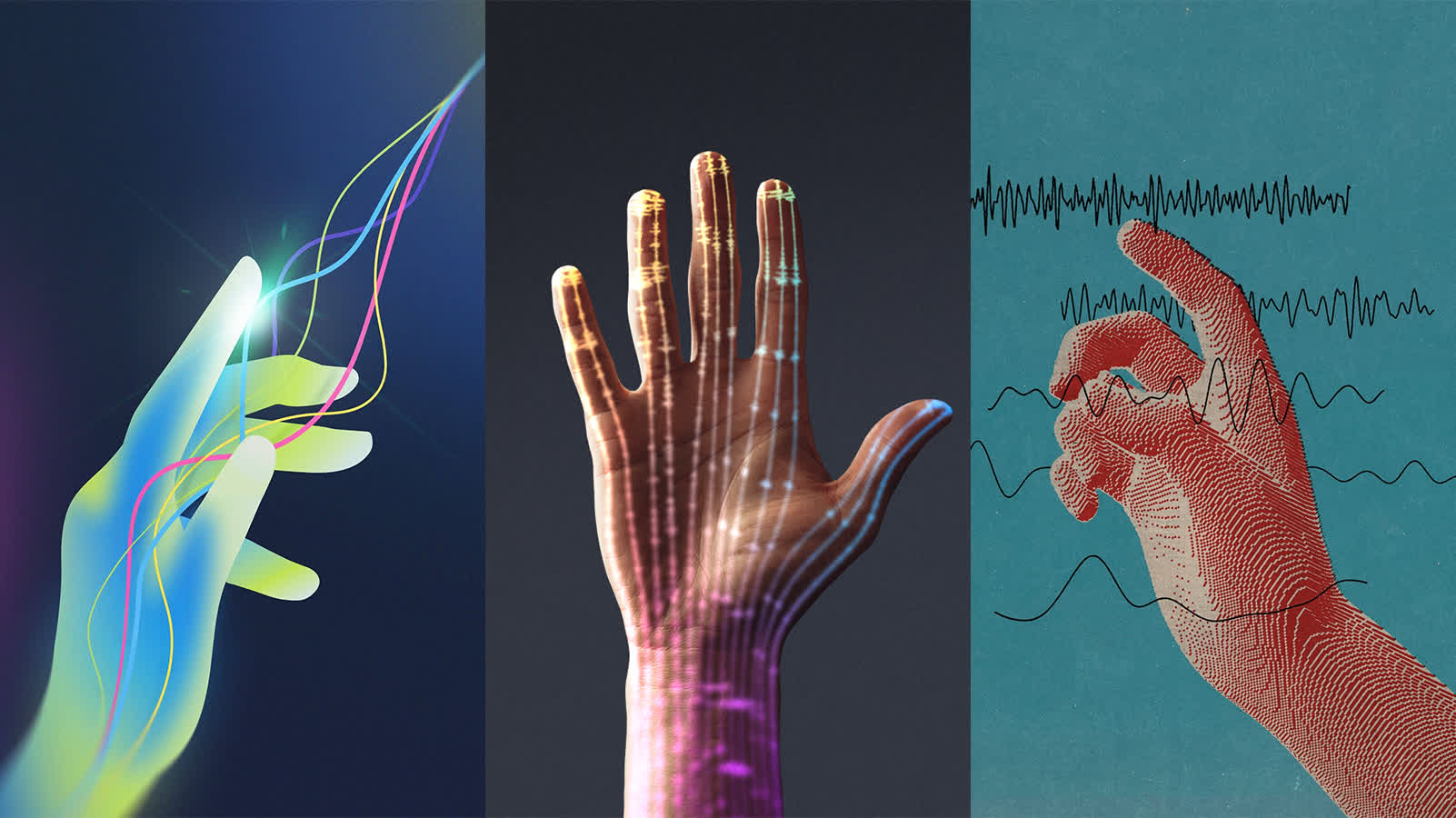

Forward-looking: A new chapter in human-computer interaction is unfolding at Meta, where researchers are exploring how the muscles in our arms could soon take the place of traditional keyboards, mice, and touchscreens. At the company’s Reality Labs division, scientists have developed an experimental wristband that reads the electrical signals produced when a person intends to move their fingers. This allows users to control digital devices using only subtle hand and wrist gestures.

This technology draws on the field of electromyography, or EMG, which measures muscle activity by detecting the electrical signals generated as the brain sends commands to muscle fibers.

Traditionally, these signals have been used in medical settings, chiefly for enabling amputees to control prosthetic limbs. Meta’s work, however, rekindles decades-old ideas by leveraging artificial intelligence to make EMG an intuitive interface for everyday computing.

Since experiments began, the prototype wristband has evolved beyond clunky early visions. Now, by detecting faint electrical pulses before any visible movement, the device enables actions like moving a laptop cursor, opening apps, and even writing in the air and seeing the text appear instantly on a screen.

“You don’t have to actually move,” Thomas Reardon, Meta’s vice president of research heading the project, told The New York Times. “You just have to intend the move.” This distinction, where intent is enough to trigger action, represents a leap forward that could outpace the very fingers and hands we’ve long relied upon.

Unlike some emerging neurotechnology approaches, Meta’s solution is entirely noninvasive. Efforts by startups like Neuralink and Synchron are focused on brain-implanted or vascular devices to read thoughts directly, complicating adoption and raising risks. Meta sidesteps surgery: anyone can fasten this wristband and begin using it, thanks to advanced AI models trained on the muscle signals of thousands of volunteers.

“The breakthrough here is that Meta has used artificial intelligence to analyze very large amounts of data, from thousands of individuals, and make this technology robust. It now performs at a level it has never reached before,” Dario Farina, a bioengineering professor at Imperial College London, who has tested the system, told The New York Times.

With EMG data from 10,000 people, Meta engineered deep learning models capable of decoding intentions and gestures without user-specific training.

Patrick Kaifosh, director of research science at Reality Labs and a co-founder of Ctrl Labs, which was acquired by Meta in 2019, explained that “out of the box, it can work with a new user it has never seen data for.” The same AI foundations that support this wristband also drive progress in handwriting recognition and gesture control, freeing users from physical keyboards and screens.

Reardon credits years of steady research, starting at Ctrl Labs, for bringing the technology to its current level. “We can see the electrical signal before your finger even moves,” he said. “We can listen to a single neuron. We’re working at the atomic level of the nervous system.”

Beyond serving able-bodied users, the technology shows promise for those with limited mobility. At Carnegie Mellon University, researchers are already evaluating the wristband with people who have spinal cord injuries, allowing them to operate computers and smartphones despite impaired hand function. The system’s ability to pick up faint traces of intention, often before any visible movement, brings hope to those who have lost traditional motor abilities. “We can see their intention to type,” said Douglas Weber, professor of mechanical engineering and neuroscience at Carnegie Mellon.

Meta aims to fold this technology into consumer products in the coming years, envisioning an era when everyday tasks, from typing to sending messages, are performed invisibly, with the flick of a muscle or the mere intent to act.

The company’s latest research, published in Nature, reports that with continued AI personalization, accuracy in decoding gestures can exceed that of earlier approaches, even keeping up with rapid muscle commands. The research also described a public dataset containing over 100 hours of EMG recordings, inviting the scientific community to build on its findings and accelerate progress in neuromotor interfaces.

Reardon, widely known as the architect of Internet Explorer, now sees the challenge as making “technology robust and accessible to everyone.” He adds: “It feels like the device is reading your mind, but it’s not. It’s just translating your intention. It sees what you’re about to do.”